TL;DR

Unix Sockets are much faster than TCP loopback connections; use them for your Kubernetes sidecars.

The Premise

So you’ve decided to sidecar a service in your pod. Maybe it’s Nginx, ProxySQL, or a custom microservice you’ve written. You’ve likely done this for speed-related reasons; if so, shouldn’t you get as much speed out of this colocation as possible? I’m going to show you how to really speed up communication between the two containers by using a Unix Socket instead of a TCP loopback connection.

Testing the Premise

I’ve written some example Go server and client code that you can run locally to see how much faster Unix Sockets are than a TCP loopback.

Here’s our basic TCP Server:

```go
package main

import (
	"log"
	"net"
)

func main() {
	// Listen on the IPv4 loopback interface only.
	l, err := net.Listen("tcp4", "127.0.0.1:8080")
	if err != nil {
		log.Fatal(err)
	}
	defer l.Close()

	for {
		c, err := l.Accept()
		if err != nil {
			log.Fatal(err)
		}
		// Handle each connection in its own goroutine.
		go handleConnection(c)
	}
}

// handleConnection writes a single line back and closes the connection.
func handleConnection(c net.Conn) {
	c.Write([]byte("test\n"))
	c.Close()
}
```

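To make this server use a Unix Socket instead, the only change we need is to the `net.Listen` call. This:

`l, err := net.Listen("tcp4", "127.0.0.1:8080")`

Becomes this:

`l, err := net.Listen("unix", "/tmp/test.sock")`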

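But killing the server with Ctrl+C skips the deferred `l.Close()`, so now we’ve got a pesky socket file left behind at /tmp/test.sock, and the next run fails with "bind: address already in use". So let’s also add this to `main`: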

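```go
// Clean up the socket file on Ctrl+C or SIGTERM.
// Requires importing "os", "os/signal" and "syscall".
// The channel is buffered so a signal isn't dropped if this
// goroutine isn't ready to receive yet.
sigs := make(chan os.Signal, 1)
signal.Notify(sigs, os.Interrupt, syscall.SIGTERM)
go func() {
	<-sigs
	os.Remove("/tmp/test.sock")
	os.Exit(1)
}()
```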

Here’s our TCP client:

```go
package main

import (
	"bufio"
	"log"
	"net"
	"time"
)

func main() {
	start := time.Now()
	// Open, read from, and close 10,000 connections, one after another.
	for i := 0; i < 10000; i++ {
		conn, err := net.Dial("tcp", "127.0.0.1:8080")
		if err != nil {
			log.Fatal(err)
		}
		// Read the single line the server sends back.
		_, err = bufio.NewReader(conn).ReadString('\n')
		if err != nil {
			log.Fatal(err)
		}
		conn.Close()
	}
	t := time.Now()
	elapsed := t.Sub(start)
	log.Printf("Time taken %s\n", elapsed)
}
```

For our Unix Sockets client, just change this:

`conn, err := net.Dial("tcp", "127.0.0.1:8080")`

To this:

`conn, err := net.Dial("unix", "/tmp/test.sock")`

Benchmarks

And the results:

```
% go run tcpclient.go
2021/07/21 15:20:08 Time taken 1.681643416s

% go run socketclient.go
2021/07/21 15:20:19 Time taken 656.821252ms
```

The Unix Socket run took about 60% less time (1.68s down to 0.66s), which makes it roughly 2.5 times faster. So what’s going on here? Why is the Unix Socket so much faster?

Let’s add this profiling code to the top of `main` in both clients (writing to `socket.prof` instead of `tcp.prof` in the Unix Socket client) to see what’s going on under the hood:

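```go
// Requires importing "os" and "runtime/pprof".
f, err := os.Create("tcp.prof")
if err != nil {
	log.Fatal(err)
}
if err := pprof.StartCPUProfile(f); err != nil {
	log.Fatal(err)
}
// Flush the profile to disk when main returns.
defer pprof.StopCPUProfile()
```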

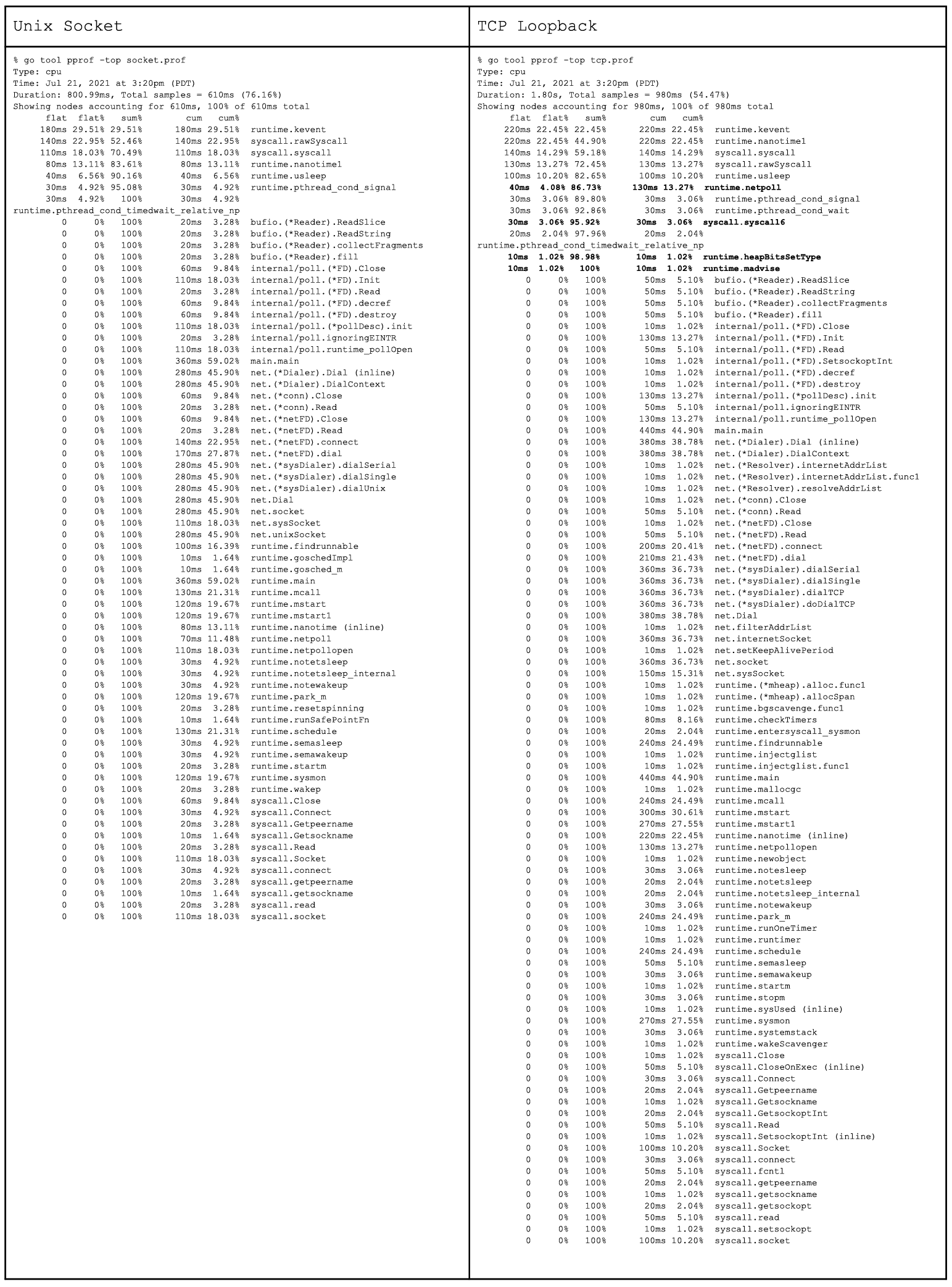

Let’s look at the profiles now:

```
% go tool pprof tcp.prof
Type: cpu
Time: Jul 21, 2021 at 3:20pm (PDT)
Duration: 1.80s, Total samples = 980ms (54.47%)
Entering interactive mode (type "help" for commands, "o" for options)
(pprof) list main.main
Total: 980ms
ROUTINE ======================== main.main in tcpclient.go
         0      440ms (flat, cum) 44.90% of Total
         .          .     17:    pprof.StartCPUProfile(f)
         .          .     18:    defer pprof.StopCPUProfile()
         .          .     19:
         .          .     20:    start := time.Now()
         .          .     21:    for i := 0; i < 10000; i++ {
         .      380ms     22:        conn, err := net.Dial("tcp", "127.0.0.1:8080")
         .          .     23:        if err != nil {
         .          .     24:            log.Fatal(err)
         .          .     25:            return
         .          .     26:        }
         .       50ms     27:        _, err = bufio.NewReader(conn).ReadString('\n')
         .          .     28:        if err != nil {
         .          .     29:            log.Fatal(err)
         .          .     30:            return
         .          .     31:        }
         .       10ms     32:        conn.Close()
         .          .     33:    }
         .          .     34:    t := time.Now()
         .          .     35:    elapsed := t.Sub(start)
         .          .     36:    log.Printf("Time taken %s\n", elapsed)
         .          .     37:}
(pprof) exit
```

```
% go tool pprof socket.prof
Type: cpu
Time: Jul 21, 2021 at 3:20pm (PDT)
Duration: 800.99ms, Total samples = 610ms (76.16%)
Entering interactive mode (type "help" for commands, "o" for options)
(pprof) list main.main
Total: 610ms
ROUTINE ======================== main.main in socketclient.go
         0      360ms (flat, cum) 59.02% of Total
         .          .     17:    pprof.StartCPUProfile(f)
         .          .     18:    defer pprof.StopCPUProfile()
         .          .     19:
         .          .     20:    start := time.Now()
         .          .     21:    for i := 0; i < 10000; i++ {
         .      280ms     22:        conn, err := net.Dial("unix", "/tmp/test.sock")
         .          .     23:        if err != nil {
         .          .     24:            log.Fatal(err)
         .          .     25:            return
         .          .     26:        }
         .       20ms     27:        _, err = bufio.NewReader(conn).ReadString('\n')
         .          .     28:        if err != nil {
         .          .     29:            log.Fatal(err)
         .          .     30:            return
         .          .     31:        }
         .       60ms     32:        conn.Close()
         .          .     33:    }
         .          .     34:    t := time.Now()
         .          .     35:    elapsed := t.Sub(start)
         .          .     36:    log.Printf("Time taken %s\n", elapsed)
         .          .     37:}
```

Measured in CPU time, opening a connection and reading from it are both cheaper over the Unix Socket (280ms vs. 380ms for the dial, 20ms vs. 50ms for the read), while closing is actually cheaper over TCP (10ms vs. 60ms). Let’s take a look at top for each:

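We can see that when you use a Unix Socket, you’re simply asking your system to do a lot less work. The profiles point the same way: the Unix Socket client collected 610ms of CPU samples in total, versus 980ms for the TCP client. That makes sense, since a Unix Socket connection bypasses the TCP/IP stack entirely, so there is no three-way handshake, no ephemeral port to allocate, and no IP-layer processing.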

Side note: when I first wrote the TCP client above, I carelessly used ‘localhost’ instead of ‘127.0.0.1’. Of course that’s slower, because every dial has to do a name lookup, but how much slower shocked me: the same test took over 50 seconds instead of 1.6 seconds. A properly written client would resolve localhost once before the loop, but how easy is that to overlook?

So what does this have to do with Kubernetes sidecars? The answer is simple. Mount an `emptyDir` volume into both of the containers you’ve co-located in the pod, put the socket file on that volume, and communicate over the Unix Socket instead of TCP. Many services, Nginx among them, can listen on or connect to a Unix Socket, and updating your own code to talk over one will likely be easy.

Caveats

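Do keep in mind that every open connection, whether over a Unix Socket or TCP, uses a file descriptor, so how many you can hold open at once is capped by your open file limit (check it with `ulimit -n`). On most Linux distros the default soft limit is 1024, so you may need to raise it depending on what you’re doing. (Go 1.19 and later raise the soft limit to the hard limit automatically in programs that import `os`, but other processes in your pod may not.)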

In Summation

So we’ve seen that using a Unix Socket can take about 60% less time than a TCP loopback. We saw that it is faster because there is less work involved in communicating over a Unix Socket, which also means less system load. We’ve also seen that converting to a Unix Socket is pretty easy. And something we already knew got underlined: name resolution is really slow, so keep it out of your hot loops. Now go forth and speed up your Kubernetes sidecars.